About

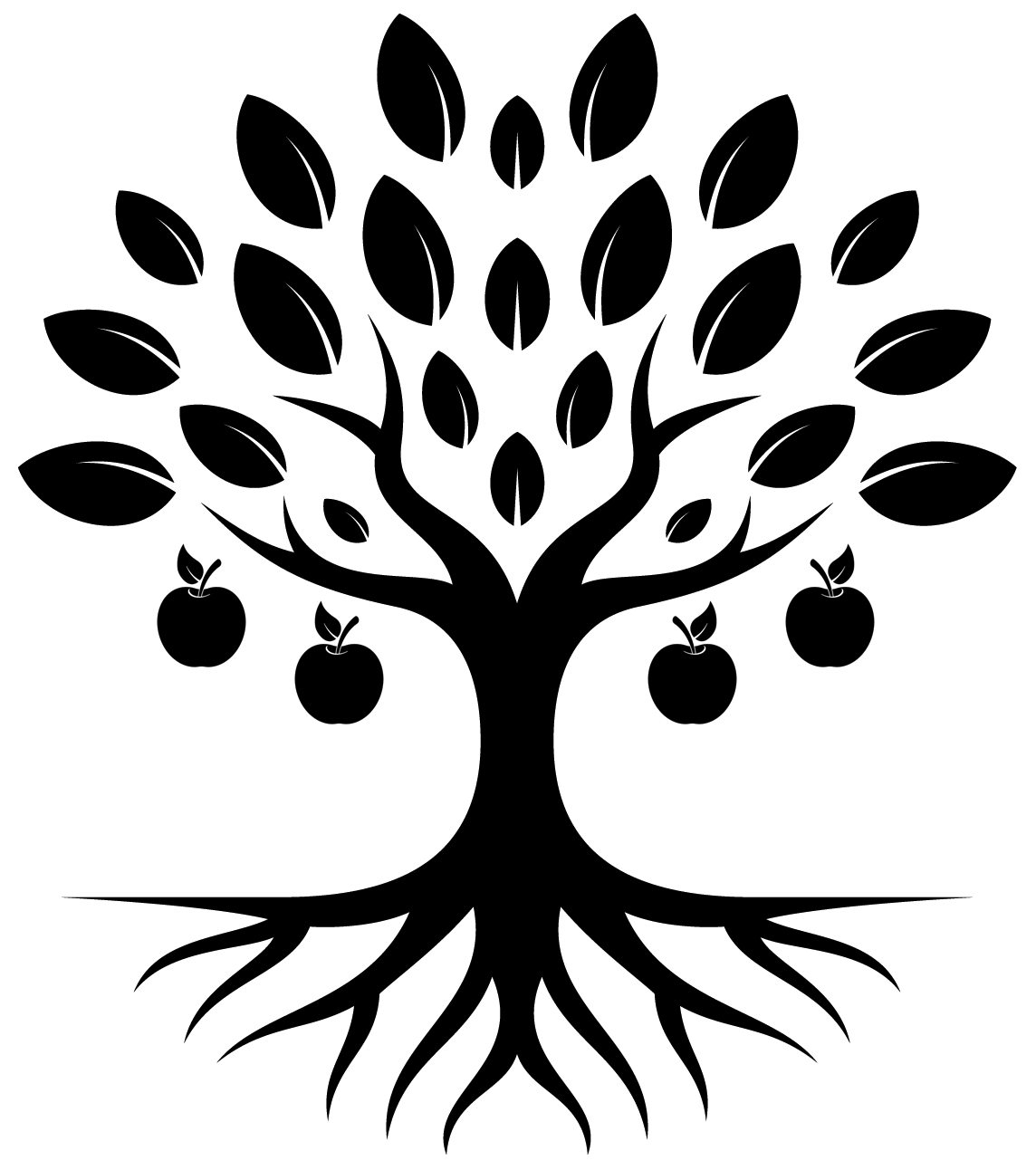

Different people will want to start in different places and may need different journeys through this tool. The Knowledge Tree can be navigated as a sequential process, from foundational learning to applied case studies, but each part of the tree, and each element within it, can also be explored independently. This flexibility allows you to tailor your learning to your immediate needs or interests, whether you want a broad understanding of the MoD AI Ethics Principles by starting at the Trunk, or wish to delve into the Leaves to explore some of the specific challenges of putting those Principles into Practice.

The research that informed the development of this tool showed that many of the STEM experts who develop AI systems for the military have not formally studied ethics. They may also have little understanding of military organisational values and the way these values shape the behaviour of military personnel. This is why we have included the Ethics Foundations as the Roots, to show how wider ethical thinking and professional norms have influenced today's AI Ethics Principles. The Fruits of the Tree are case studies demonstrating how JSP 936 can support structured risk assessment, so that risks are properly evaluated, considered and mitigated.

This tool builds on the Military Ethics Education Cards and Apps developed by Prof David Whetham, Director of the Centre for Military Ethics at King’s College London. These tools have been translated into 12 languages and have been used successfully by professional militaries around the world to promote discussion of ethics and the sharing of best practice. We applied the same methodology here, conducting hundreds of interviews with stakeholders to develop the structure and content of this tool for AI developers working with Defence.

We hope that this resource will continue to grow, with new material, links to useful resources and appropriately anonymised real-world ethical risk assessments added to the Fruits section. If you have helpful suggestions or feedback, please contact us via the feedback link below.